Corporate pensions company Scottish Widows to lobby for specific exemptions from the General Data Protection Regulation ahead of EU initiative’s May 2018 introduction.

Scottish Widows seeks derogations in relation to communicating with its customers in order to “bring people to better outcomes.”

The Lloyds Banking Group subsidiary Scottish Widows, the 202-year-old life, pensions and investment company based in Edinburgh, has called for derogations from the GDPR.

A great deal has been written across the Internet about the impending GDPR, and much of the information available is contradictory. In fact, many organisations and companies have been at pains to work out what exactly will be expected of them come May 2018. While it is true that the GDPR will substantially increase policy enforcers’ remits for penalising breaches of data protection law, the decontextualised figure of monetary penalties reaching €20 million or 4% of annual global turnover, whichever is higher – while accurate in severe cases – has become something of a tub-thump for critics of the regulation.
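As a rough illustration of how that headline figure works, the sketch below assumes the upper tier of fines, where the ceiling is the higher of €20 million or 4% of worldwide annual turnover. The function name and the sample turnover figures are hypothetical, and the result is a ceiling, not a prediction of what a regulator would actually impose.

```python
# Illustrative sketch only: the upper-tier GDPR fine ceiling.
# Assumption: ceiling = the higher of EUR 20 million or 4% of worldwide annual turnover.
# The function name and the sample turnovers below are hypothetical.

FIXED_CAP_EUR = 20_000_000
TURNOVER_PERCENT = 4


def max_gdpr_fine_eur(annual_turnover_eur: int) -> int:
    """Return the maximum possible fine, in whole euros, for a given turnover."""
    # Integer arithmetic avoids floating-point rounding on large amounts.
    turnover_cap = annual_turnover_eur * TURNOVER_PERCENT // 100
    return max(FIXED_CAP_EUR, turnover_cap)


if __name__ == "__main__":
    for turnover in (50_000_000, 500_000_000, 2_000_000_000):
        ceiling = max_gdpr_fine_eur(turnover)
        print(f"turnover EUR {turnover:,}: ceiling EUR {ceiling:,}")
```

On these assumptions, the ceiling stays at €20 million for any organisation turning over up to €500 million; the 4% figure only takes over above that point.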

Nevertheless, the GDPR is the most ambitious and wide-scale attempt to date to secure individual privacy rights in a proliferating global information economy, and organisations should be preparing for compliance. But the tangible benefits from consumer and investor trust provided by data compliance should always be kept in sight. There is more information about the GDPR on this blog and the Data Compliant main site.

Certain sectors will feel the effects of GDPR – in terms of the scale of work to prepare for compliance – more than others. It is perhaps understandable, therefore, that Scottish Widows, whose pension schemes may often be supplemented by semi-regular advice and contact, would seek derogations from the GDPR’s tightened conditions for proving consent to specific types of communications. Since the manner in which Scottish Widows acquired consent to communicate with its customers will not be recognised under the new laws, the company points out that “in future we will not be able to speak to old customers we are currently allowed to speak to.”

Scottish Widows’ head of policy, pensions and investments Peter Glancy’s central claim is that “GDPR means we can’t do a lot of things that you might want to be able to do to bring people to better outcomes.”

Article 23 of the GDPR enables legislators to provide derogations in certain circumstances. The Home Office and Department of Health for instance have specific derogations so as not to interfere with the safeguarding of public health and security. Scottish Widows cite the Treasury’s and DWP’s encouragement of increased pension savings, and so it may well be that the company plans to lobby for specific exemptions on the grounds that, as it stands, the GDPR may put pressure on the safeguarding of the public’s “economic or financial interests.”

Profiling of low-income workers and vulnerable people for marketing purposes in the gambling industry provokes outrage and renewed calls for reform.

The ICO penalised charities for “wealth profiling”. Gambling companies are also “wealth profiling” in reverse – to target people on low incomes who can ill afford to play.

If doubts remain that the systematic misuse of personal data demands tougher data protection regulations, these may be dispelled by revelations that the gambling industry has been using third-party affiliates to harvest data so that online casinos and bookmakers can target people on low incomes and former betting addicts.

An increase in the cost of gambling ads has prompted the industry to adopt more aggressive marketing and profiling with the use of data analysis. An investigation by the Guardian including interviews with industry and ex-industry insiders describes a system whereby data providers or ‘data houses’ collect information on age, income, debt, credit information and insurance details. This information is then passed on to betting affiliates, who in turn refer customers to online bookmakers for a fee. This helps the affiliates and the gambling firms tailor their marketing to people on low incomes, who, according to a digital marketer, “were among the most successfully targeted segments.”

The data is procured through various prize and raffle sites that prompt participants to divulge personal information after a lengthy set of terms and conditions which, marketers in the industry suspect, serves only to obscure from many users how and where the data will be transferred and used.

This practice, which enables ex-addicts to be tempted back into gambling by the offer of free bets, has been described as extremely effective. In November last year, the Information Commissioner’s Office (ICO) targeted more than 400 companies after allegations that the betting industry was sending spam texts (a misuse of personal data). However, there is no mention of any official measures being taken after the investigations; these might have included a fine of up to £500,000 under the current regulations. Gambling companies are also regulated separately by the Gambling Commission, which seeks to ensure responsible marketing and practice. But under the GDPR it may well be that the ICO would have licence to take a much stronger stance against the industry’s entrenched abuse of personal information to encourage problem gambling.

Latest ransomware attack on health institution affects Scottish health board, NHS Lanarkshire.

According to the board, a new variant of the malware Bitpaymer, different to the infamous global WannaCry malware, infected its network and led to some appointment and procedure cancellations. Investigations are ongoing into how the malware managed to infect the system without detection.

Complete defence against ransomware attacks is problematic for the NHS because certain vital life-saving machinery and equipment could be disturbed or rendered dysfunctional if the NHS network is changed too dramatically (i.e. tweaked to improve anti-virus protection).

A spokesman for the board’s IT department told the BBC, “Our security software and systems were up to date with the latest signature files, but as this was a new malware variant the latest security software was unable to detect it. Following analysis of the malware our security providers issued an updated signature so that this variant can now be detected and blocked.”

Catching the hackers in the act

Newly set-up online servers come under attack within just over an hour, and are then subjected to “constant” assault.

According to an experiment conducted by the BBC, cyber-criminals start attacking newly set-up online servers about an hour after they are switched on.

The BBC asked a security company, Cybereason, to carry out the experiment to judge the scale and calibre of cyber-attacks that firms face every day. A “honeypot” was then set up, in which servers were given real, public IP addresses and other identifying information that announced their online presence. Each was configured to resemble, superficially at least, a legitimate server. Each server could accept requests for webpages, file transfers and secure networking, and was accessible online for about 170 hours.

They found that automated attack tools scanned such servers about 71 minutes after they were set up online, trying to find areas they could exploit. Once the machines had been found by the bots, they were subjected to a “constant” assault by the attack tools.

Vulnerable people’s personal information exposed online for five years

Vulnerable customers’ personal data needs significant care to protect the individuals and their homes from harm

Nottinghamshire County Council has been fined £70,000 by the Information Commissioner’s Office for posting the genders, addresses, postcodes and care needs of elderly and disabled people in an online directory – without basic security or access restrictions such as a login requiring a username or password. The data also included the number of home visits per day and whether the individuals were or had been in hospital. Though names were not included on the portal, it would have taken very little effort to identify the individuals from their addresses and genders.

This breach was discovered when a member of the public was able to access and view the data without any need to log in, and was concerned that it could enable criminals to target vulnerable people – especially as such criminals would be aware that the home would be empty if the occupant was in hospital.

The ICO’s Head of Enforcement, Steve Eckersley, stated that there was no good reason for the council to have overlooked the need to put robust measures in place to protect the data – the council had financial and staffing resources available. He described the breach as “serious and prolonged” and “totally unacceptable and inexcusable.”

The “Home Care Allocation System” (HCAS) online portal was launched in July 2011, to allow social care providers to confirm that they had capacity to support a particular service user. The breach was reported in June 2016, by which time HCAS contained a directory of 81 service users. It is understood that the data of 3,000 people had been posted in the five years the system was online.

Not surprisingly, the Council offered no mitigation to the ICO. This is a typical example of a case in which a Data Privacy Impact Assessment will be mandated under GDPR.

Harry Smithson, 6th September 2017

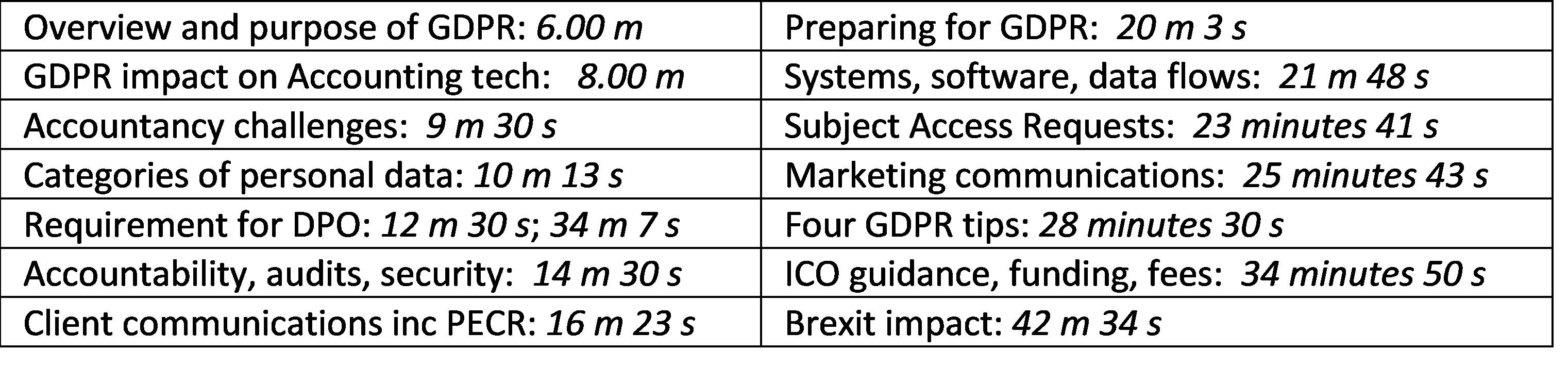

GDPR Debate

Data Compliant is working with its clients to help them prepare for GDPR, so if you are concerned about how GDPR will affect your firm or business, feel free to give us a call and have a chat on 01787 277742 or email dc@datacompliant.co.uk if you’d like more information.