When are they needed? How are they done?

From 25 May 2018, under the new General Data Protection Regulation (GDPR), Privacy Impact Assessments (PIAs) will become known as Data Protection Impact Assessments (DPIAs), and will be mandatory in certain circumstances rather than merely recommended.

The Information Commissioner’s Office (ICO) currently describes PIAs as “a tool which can help organisations identify the most effective way to comply with their data protection obligations and meet individuals’ expectations of privacy.”

Although the soon-to-be-rechristened DPIAs will become a legal requirement, data controllers should continue to embrace them as an opportunity: identifying risks at an early stage in any planned operation helps avert heavy fines, reputational damage to the brand and the other risks associated with data breaches.

When will a DPIA be legally required?

Organisations will be required to carry out a DPIA when data processing is “likely to result in a high risk to the rights and freedoms of individuals.” A DPIA may be carried out during an existing project or before a planned one, wherever the data processing involved poses a risk to individuals’ rights under the Data Protection Act. DPIAs can also vary in scope, depending on the organisation and the scale of its project.

DPIAs will therefore be required when an organisation is planning an operation that could affect anyone’s right to privacy: broadly speaking, their right ‘to be left alone’. DPIAs are primarily designed to help organisations avoid infringing an individual’s freedom to “control, edit, manage or delete information about themselves and to decide how and to what extent such information is communicated to others.” If there is a risk of any such infringement, a DPIA must be carried out.

Listed below are examples of projects, varying in scale, for which a PIA is currently advised. It is reasonable to assume that all of them will require a DPIA once the GDPR comes into force:

- A new IT system for storing and accessing personal data.

- A new use of technology such as an app.

- A data sharing initiative where two or more organisations (even if they are part of the same group company) seek to pool or link sets of personal data.

- A proposal to identify people in a particular group or demographic and initiate a course of action.

- Processing large quantities of sensitive personal data.

- Using existing data for a new and unexpected or more intrusive purpose.

- A new surveillance system (especially one which monitors members of the public) or the application of new technology to an existing system (for example, adding Automatic Number Plate Recognition (ANPR) capabilities to existing CCTV).

- A new database which consolidates information held by separate parts of an organisation.

- Legislation, policy or strategies which will impact on privacy through the collection or use of information, or through surveillance or other monitoring.
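For organisations that keep project records in software, the screening questions could be captured as a simple checklist based on the examples above. The sketch below is purely illustrative: the class name, the field names (which loosely paraphrase the list) and the rule that a single “yes” flags a project for a full DPIA are all assumptions, not ICO guidance.

```python
from dataclasses import dataclass, fields


@dataclass
class ProjectScreening:
    """Hypothetical screening answers for a planned project (True = yes)."""
    new_system_for_personal_data: bool = False
    new_technology_such_as_app: bool = False
    data_sharing_or_pooling: bool = False
    targets_group_or_demographic: bool = False
    sensitive_data_in_quantity: bool = False
    new_or_more_intrusive_purpose: bool = False
    new_or_extended_surveillance: bool = False
    consolidates_separate_databases: bool = False
    policy_affecting_privacy: bool = False


def triggered_criteria(screening: ProjectScreening) -> list[str]:
    """Return the name of every screening question answered 'yes'."""
    return [f.name for f in fields(screening) if getattr(screening, f.name)]


def dpia_recommended(screening: ProjectScreening) -> bool:
    # Assumption: any single 'yes' is enough to flag the project for a DPIA.
    return bool(triggered_criteria(screening))


if __name__ == "__main__":
    # Example: adding ANPR to existing CCTV counts as extended surveillance.
    cctv_upgrade = ProjectScreening(new_or_extended_surveillance=True)
    print(dpia_recommended(cctv_upgrade))    # True
    print(triggered_criteria(cctv_upgrade))  # ['new_or_extended_surveillance']
```

Keeping the reasons alongside the yes/no answer is deliberate: the triggered criteria give the later steps of the DPIA a starting point for describing information flows and identifying risks.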

How is a DPIA carried out?

A DPIA comprises seven main steps:

- Identify the need for a DPIA

This mainly involves answering ‘screening questions’ early in a project’s development to identify its potential impact on individuals’ privacy. Project managers should then begin to think about how these issues can be addressed, consulting stakeholders as they do so.

- Describe the information flows

Explain how information will be obtained, used and retained. This part of the process can reveal, and help to prevent, ‘function creep’: where data ends up being processed or used for purposes that were never intended or foreseen.

- Identify the privacy and related risks

Compile a record of the risks to individuals, in terms of possible intrusions into their privacy, as well as the corporate risks to the organisation, in terms of regulatory action, reputational damage and loss of public trust. This includes checking compliance with the Data Protection Act and the GDPR.

- Identify and evaluate the privacy solutions

With the record of risks ready, devise a number of solutions to eliminate or minimise these risks, and evaluate the costs and benefits of each approach. Consider the overall impact of each privacy solution.

- Sign off and record the DPIA outcomes

Obtain appropriate sign-offs and acknowledgements throughout the organisation. A report based on the findings and conclusions of the prior steps of the DPIA should be published and accessible for consultation throughout the project.

- Integrate the outcomes into the project plan

Ensure that the DPIA’s outcomes are built into the overall project plan, so that the DPIA serves as an integral component throughout the development and execution of the project.

- Consult with internal and external stakeholders as needed throughout the process

This is not a ‘step’ as such, but an ongoing commitment to being transparent with stakeholders about how the DPIA is carried out, and to drawing on the expertise and knowledge of the organisation’s various stakeholders, from colleagues to customers. As the ICO explains, “data protection risks are more likely to remain unmitigated on projects which have not involved discussions with the people building a system or carrying out procedures.”
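The “describe the information flows” step lends itself to a structured record. The sketch below is a hypothetical illustration, not a prescribed DPIA format: each flow records where a category of data is obtained, how long it is retained and which purposes were declared for it, and any proposed use outside those purposes is flagged as potential function creep to be taken back through the DPIA. The class, function and field names, and the example data, are all assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class InformationFlow:
    """Hypothetical record of how one category of personal data is handled."""
    data_category: str
    obtained_from: str
    retention_months: int
    declared_purposes: set[str] = field(default_factory=set)


def function_creep(flow: InformationFlow, proposed_use: str) -> bool:
    """True if a proposed use falls outside the purposes recorded for this flow."""
    return proposed_use not in flow.declared_purposes


def review_uses(flows: list[InformationFlow],
                proposed_uses: dict[str, list[str]]) -> list[tuple[str, str]]:
    """Return (data_category, use) pairs that should go back through the DPIA."""
    flagged = []
    for flow in flows:
        for use in proposed_uses.get(flow.data_category, []):
            if function_creep(flow, use):
                flagged.append((flow.data_category, use))
    return flagged


if __name__ == "__main__":
    emails = InformationFlow(
        data_category="customer_emails",
        obtained_from="online checkout",
        retention_months=24,
        declared_purposes={"order confirmation", "delivery updates"},
    )
    plans = {"customer_emails": ["delivery updates", "marketing"]}
    print(review_uses([emails], plans))  # [('customer_emails', 'marketing')]
```

A flagged use is not automatically unlawful; the point is that it has not yet been assessed, so its risks should be identified and evaluated like any other.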

DPIAs – what are the benefits?

Organisations that conduct DPIAs stand to benefit in several ways:

- cost benefits from adopting a Privacy by Design approach: knowing the risks before starting work allows issues to be fixed early, reducing development costs and schedule delays

- risk mitigation in relation to fines and loss of sales caused by lack of customer and/or shareholder confidence

- reputational benefits and trust building from being seen to consider and embed privacy issues into a programme’s design from the outset

For more information about DPIAs and how Data Compliant can help, please email dc@datacompliant.co.uk.

Harry Smithson 20th July 2017

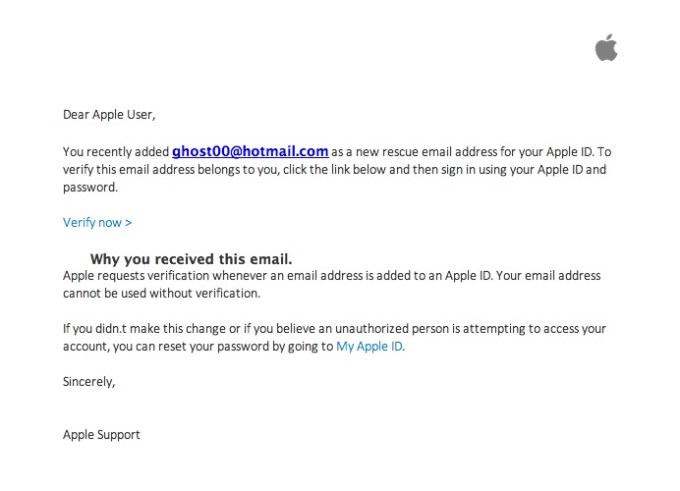

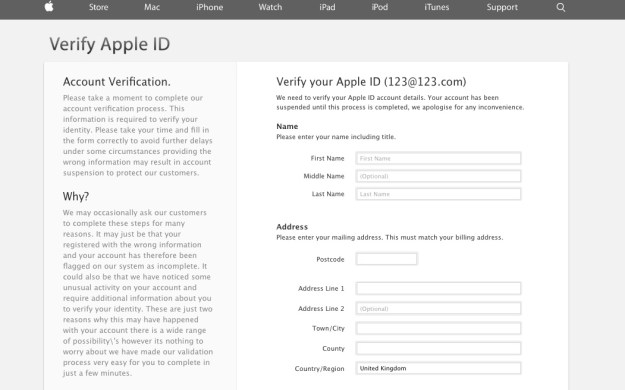

There I was, at my desk on Monday morning, preoccupied with getting everything done before the Christmas break, and doing about three things at once (or trying to). An email hit my inbox with the subject “your account information has been changed”. Because I regularly update all my passwords, I’m used to these kinds of emails arriving from different companies, sometimes to remind me that I’ve logged in on this or that device, or to tell me that my password has been changed and to check that I am the person who actually changed it.

The Investigatory Powers Bill, also known as the Snoopers’ Charter, was passed by the House of Lords last week. This means that service providers will now need to keep, for 12 months, records of every website you visit (the website itself, not the exact URL) and every phone call you make, including how long each call lasts and the date and time it was made. They will also track the apps you use on your phone or tablet.

A historical society (the ICO hasn’t released which one) has been fined after the theft of a laptop holding sensitive personal data on people who had donated or loaned artefacts. The laptop was unencrypted, and the ICO found that there were no policies in place covering encryption or homeworking. The organisation was fined just £500, reduced to £400 if paid early. Not much of a punishment if you ask me: who doesn’t encrypt sensitive personal data nowadays?