Many business activities these days entail significant amounts of data processing and transfer. It is not always clear which of your organisation’s activities legally require an impact assessment on the use of personal data, i.e. a Data Protection Impact Assessment (DPIA).

People may be familiar with Privacy Impact Assessments (PIAs), which were recommended as best practice by the Information Commissioner before the EU’s GDPR made DPIAs mandatory for certain activities. Now the focus is not so much on the obligation to meet individuals’ privacy expectations, but on the necessity to safeguard everyone’s data protection rights.

DPIAs are crucial records to demonstrate compliance with data protection law. In GDPR terms, they are evidence of transparency and accountability. They protect your clients, your staff, your partners and any potential third parties. Being vigilant against data protection breaches is good for everyone – with cybercrime on the rise, it’s important that organisations prevent unscrupulous agents from exploiting personal information.

In this blog, we’ll go through a step-by-step guide for conducting a DPIA. But first, let’s see what sort of things your organisation might be doing that need a DPIA.

When is a DPIA required?

The regulations are clear: DPIAs are mandatory for data processing that is “likely to result in a high risk to the rights and freedoms” of individuals. A DPIA can be carried out for an activity that is already under way, or before a planned project begins. The scope of a DPIA should be proportionate to the scope of the processing.

Here are some examples of projects where a DPIA is necessary:

- A new IT system for storing and accessing personal data;

- New use of technology such as an app;

- A data sharing initiative in which two or more organisations wish to pool or link sets of personal data;

- A proposal to identify people of a specific demographic for the purpose of commercial or other activities;

- Using existing data for a different purpose;

- A new surveillance system or software/hardware changes to the existing system; or

- A new database that consolidates information from different departments or sections of the organisation.

The GDPR also has a couple more conditions for a DPIA to be mandatory, namely:

- Any evaluation you make based on automated processing, including profiling and automated decision-making, especially if this can have legal or other significant consequences for someone; and

- The processing of large quantities of special category personal data (formerly known as sensitive personal data).
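The examples and conditions above can be turned into a simple screening questionnaire. The following is a minimal sketch in Python, assuming a yes/no answer per question; the class, field and function names are hypothetical, and the questions simply mirror the lists above rather than any official checklist.

```python
from dataclasses import dataclass, fields


@dataclass
class ProjectScreening:
    """Yes/no screening answers (hypothetical field names mirroring the lists above)."""
    new_it_system_for_personal_data: bool = False
    new_technology_such_as_app: bool = False
    data_sharing_between_organisations: bool = False
    targets_specific_demographic: bool = False
    reuses_data_for_new_purpose: bool = False
    new_or_changed_surveillance: bool = False
    consolidates_departmental_data: bool = False
    automated_evaluation_or_profiling: bool = False
    large_scale_special_category_data: bool = False


def dpia_triggers(screening: ProjectScreening) -> list[str]:
    """Return the name of every screening question answered 'yes'."""
    return [f.name for f in fields(screening) if getattr(screening, f.name)]


def dpia_recommended(screening: ProjectScreening) -> bool:
    """Flag the project for a DPIA if at least one trigger applies."""
    return bool(dpia_triggers(screening))


# Example: a new app that handles large volumes of special category data.
project = ProjectScreening(
    new_technology_such_as_app=True,
    large_scale_special_category_data=True,
)
print(dpia_triggers(project))
print(dpia_recommended(project))
```

A flagged project is only a prompt for the data protection team to look more closely; the decision on whether a DPIA is legally required still rests with them.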

An eight-step guide to your DPIA

- Identify the need for a DPIA

  - Looking at the list above should give you an idea of whether a DPIA will be required. There are also various ‘screening questions’ that should be asked early in a project’s development. Importantly, the data protection team should assess the potential impact the project may have on individuals’ privacy rights. Internal stakeholders should also be consulted.

- Describe the data flows

  - Explain how information will be collected, used and stored. This is important for addressing the risk of ‘function creep’, i.e. when data ends up being used for purposes other than those originally intended, which may have unintended consequences.

- Identify privacy and related risks

  - Identify and record the risks that relate to individuals’ privacy, including that of clients and staff.

  - Also identify corporate or organisational risks, for example the risks of non-compliance, such as fines, or a loss of customers’ trust. This involves a compliance check with the Principles of the Data Protection Act 2018 (the UK’s GDPR legislation).

- Identify and evaluate privacy solutions

  - With the risks recorded, find ways to eliminate or minimise them. Consider doing a cost/benefit analysis of each possible solution and consider its overall impact.

- Sign off and record DPIA outcomes

  - Obtain the appropriate sign-off and acknowledgements throughout your organisation. A record of your DPIA evaluations and decisions should be made available for consultation during and after the project.

- Consult with internal and external stakeholders throughout the project

  - This is not so much a step as an ongoing process. Commit to being transparent with stakeholders about the DPIA process. Listen to what your stakeholders have to say and make use of their expertise. Stakeholders can include both employees and customers. Being open to consultation, with clear communication channels through which stakeholders can raise data protection concerns or ideas, will be extremely useful.

- Ongoing monitoring

  - The DPIA’s results should be fed back into the wider project management process. You should take the time to make sure that each stage of the DPIA has been implemented properly, and that the objectives are being met.

  - Remember: if the project changes in scope, or its aims develop during the project lifecycle, you may need to revisit step one and make the appropriate reassessments.
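The risk identification and solution steps above can be recorded in a simple risk register. This is a minimal sketch in Python, not a prescribed method: the `Risk` class, the 1 to 3 likelihood and severity scales, the multiplied score and the threshold of 6 are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Risk:
    description: str
    likelihood: int  # 1 (remote) to 3 (probable); the scale is an assumption
    severity: int    # 1 (minimal) to 3 (severe); the scale is an assumption
    mitigation: Optional[str] = None  # the agreed privacy solution, if any

    @property
    def score(self) -> int:
        """Simple likelihood x severity score (an illustrative convention)."""
        return self.likelihood * self.severity


def unresolved_high_risks(register: list[Risk], threshold: int = 6) -> list[Risk]:
    """Return risks scoring at or above the threshold with no recorded mitigation."""
    return [r for r in register if r.score >= threshold and r.mitigation is None]


register = [
    Risk("Data reused for an unplanned purpose (function creep)", 2, 3),
    Risk("Staff records visible to other departments", 2, 2,
         mitigation="Role-based access controls"),
    Risk("Fine for non-compliance", 1, 3),
]

# List the risks that still need a solution before sign-off.
for risk in unresolved_high_risks(register):
    print(f"{risk.score}: {risk.description}")
```

Keeping the mitigation alongside each risk means the same register can serve as part of the record that is signed off and revisited if the project changes.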

This brief outline should help you to structure your DPIAs and to understand when they are appropriate. In time, these assessment processes will become second nature and an integral part of your project management system. Good luck!

If you have any questions about data protection, please contact us via email at team@datacompliant.co.uk or call 01787 277742.

Harry Smithson, 21st October 2019

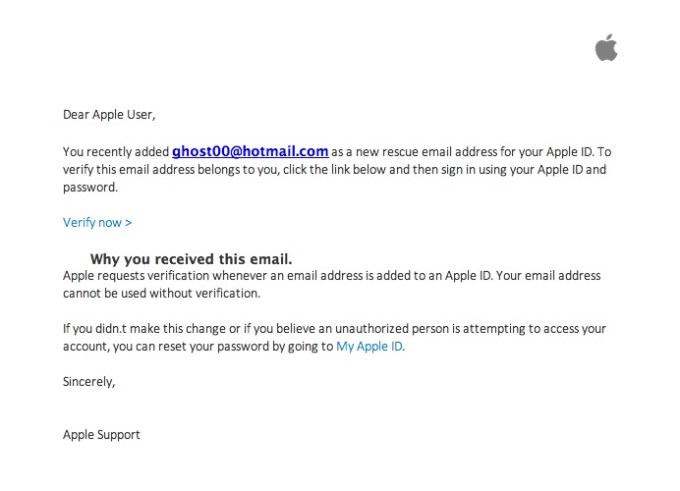

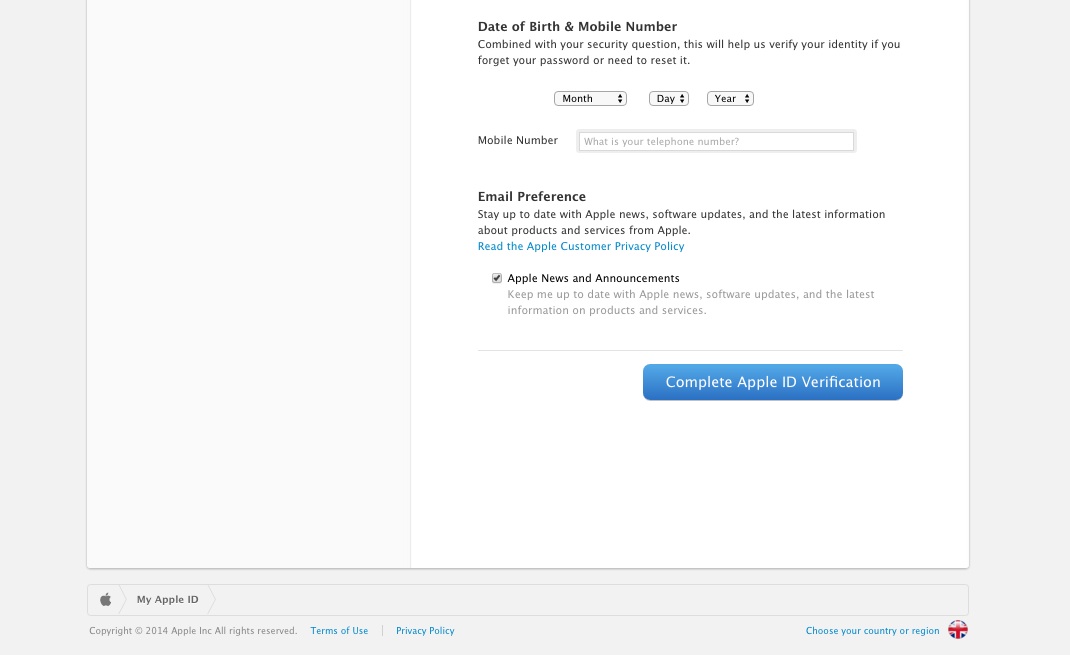

There I was, at my desk on Monday morning, preoccupied with getting everything done before the Christmas break, and doing about three things at once (or trying to). An email hit my inbox with the subject “your account information has been changed”. Because I regularly update all my passwords, I’m used to these kinds of emails arriving from different companies – sometimes to remind me that I’ve logged in on this or that device, or to tell me that my password has been changed, and to check that I am the person who actually changed it.
